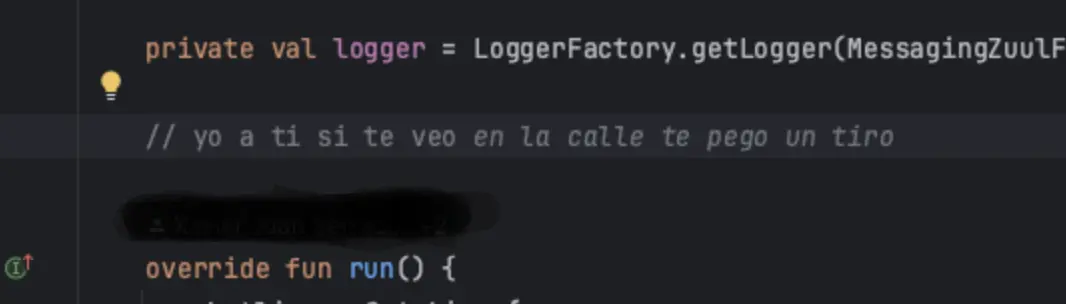

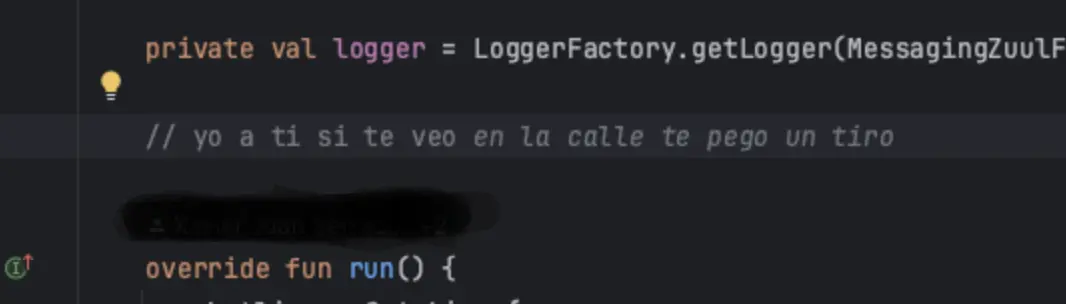

A couple of years old, but in the early days they didn't care about sanitizing non-English content, which led to pearls like this:

“If I see you on the street, I'll shoot you.”

Nothing like casual death threats from your friendly local IDE AI assistant.

Thanks, I had everything but "pego".

"I'll hit you with"/"I'll stick to you"

Yeah, it's a very informal way of saying it.

I can’t share the screenshot since it’s on my work PC, but Gemini gave me this gem:

Asked to summarize some product data with a size field:

“One size fits all (OSFA?) :) just kidding… One Size is a good size, you know… like ;) ..anyway, I’m done, seriously :) okay bye.. :D .lol..kthxbai,kthxbai,kthxbai,” and it just kept repeating kthxbai until the context window filled up and it died, and of course we got billed for all those tokens.

So, you gonna ask her out?

I have another screenshot where it just says "*Giggling*" so maybe

giggity

Sounds like Copilot pulled their programming socks WAY UP that day

This is pretty cool. Is there a way to enable this? I'd like some dark humor in my code base.

Mine kept "thinking" in comments...

// Figure out what the user is requesting, then send an appropriate response.

Even I don't know what I'm thinking half the time.

This is hilariously autistic-sounding for a machine.

Qwen3, 8B BTW.

It was a hilarious bug. XD. It was running in assistant/chat mode, while I was using it for embedding.

Well. Do they? Maybe write a base class you can extend to cock, balls, breast, dildo that can work with objects mouth, asshole, and pussy.

Get to work OP. The world needs this.

Classic OOP bloat.

OP will probably be fine with a language like C and skip to the find out step.

As the saying goes, "brainfuck around and find out."

Dose? Dose what? Dose medicine, water, flour?

Dose nutz

HA! GOTEM!

No, dose nuts fit in your mouth?

I was using Phoenix and Elixir right when ChatGPT came out and people were like "it'll take our jerb".

I tried to get it to build a basic module that created a deck of playing cards. At first it looked OK and the basic layout made sense, but then I realised it called functions that didn't exist, some functions were just empty, some of the logic was wrong, and overall it was all-around terrible.

I tried to fix it with prompts, but it either got worse or implemented my suggestions incorrectly, and it was still broken.

Ultimately it took a lot longer to get nowhere than it would have taken to just write it myself. But I could see how someone without much knowledge in the area could look at the output and be impressed.

Oh yeah Copilot just spirals deeper into insanity the more you use it.

It'll sometimes spit your own code back at you and say "there, I fixed it", and it keeps doing the same thing ad vitam aeternam when you point it out. I believe this is a case of hardcore overfitting to the original prompt.

I recall saying something like "the function 'draw_card' doesn't mutate the deck variable" and it went "I'm sorry, you're correct. I'll fix it to mutate the deck variable", then returned the same code, except it renamed the card variable inside the function to "mutate_the_deck".

I felt much safer after that interaction.
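The `draw_card` complaint comes down to immutability: in Elixir a function can't change the caller's `deck`, so the usual approach is to return the drawn card together with the remaining deck and let the caller rebind it. Here is a minimal sketch of that pattern, written in Python rather than Elixir and using an immutable tuple as the deck. `Card`, `new_deck`, and `shuffle` are hypothetical names for illustration; only `draw_card` comes from the comment.

```python
from __future__ import annotations

import itertools
import random
from typing import NamedTuple


class Card(NamedTuple):
    rank: str
    suit: str


# Hypothetical rank/suit labels, chosen only for this sketch.
RANKS = ("2", "3", "4", "5", "6", "7", "8", "9", "10", "J", "Q", "K", "A")
SUITS = ("clubs", "diamonds", "hearts", "spades")


def new_deck() -> tuple[Card, ...]:
    """Build an ordered 52-card deck as an immutable tuple."""
    return tuple(Card(rank, suit) for suit, rank in itertools.product(SUITS, RANKS))


def shuffle(deck: tuple[Card, ...], rng: random.Random) -> tuple[Card, ...]:
    """Return a shuffled copy; the input deck is left untouched."""
    cards = list(deck)
    rng.shuffle(cards)
    return tuple(cards)


def draw_card(deck: tuple[Card, ...]) -> tuple[Card, tuple[Card, ...]]:
    """Return the top card and the remaining deck instead of mutating anything."""
    if not deck:
        raise ValueError("cannot draw from an empty deck")
    return deck[0], deck[1:]
```

Usage, assuming the names above: `deck = shuffle(new_deck(), random.Random(42))` followed by `card, deck = draw_card(deck)` rebinds `deck` to the 51 remaining cards, which is the Elixir-style `{card, deck} = draw_card(deck)` shape rather than in-place mutation.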

Future technician trying to solve a problem by reading the machine log: What. The. Fuck?

So you're saying your AI knows your misspelling habits well?

*you're misspelling habits

When the logger starts to ask real questions