It’s just asking them to find sources from excerpts. I don’t think this is something they have been trained on with much emphasis, is it?

Yeah, this isn't a general test of "factualness", it's a very narrow and specific subset of capability that many of these AIs were not designed for.

That being said, it does not change my already poor opinion of generative AI.

Is the plan for AI to give tech plausible deniability when it lies about politics and spreads other mis/disinformation?

AI has its uses: I would love to read AI-written books in fantasy games (instead of the 4-page books we currently have) or talk to an AI in the next RPG. Hell, it might even make better randomly generated quests and such things.

You know, places where hallucinations don't matter.

AI as a search engine only makes sense when/if they ever find a way to avoid hallucinations.

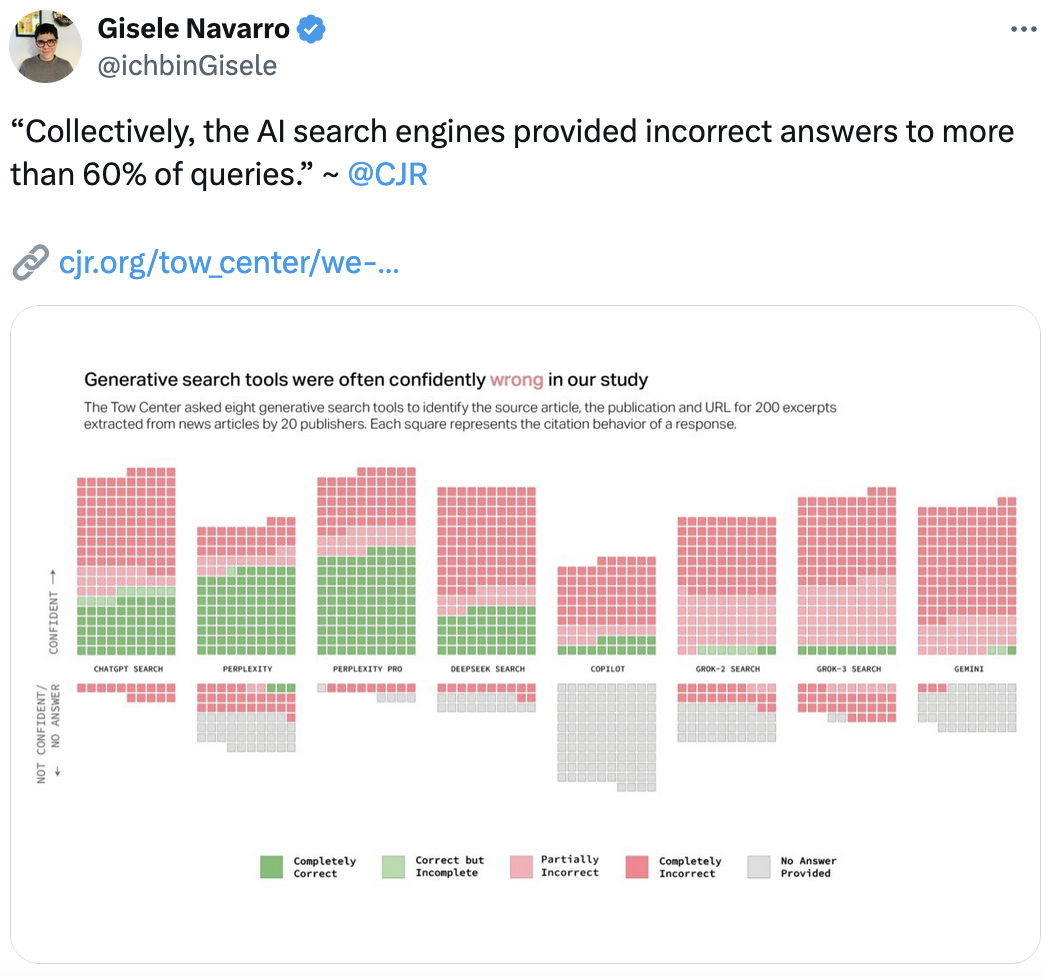

Go figure, the one providing sources for answers was the most correct... But pretty wild how it basically leaves the others in the dust!

That's probably why I end up arguing with Gemini. It's constantly lying.

AI can be a load of shite but I’ve used it to great success with the Windows keyboard shortcut while I’m playing a game and I’m stuck or want to check something.

Kinda dumb but the act of not having to alt-tab out of the game has actually increased my enjoyment of the hobby.

I mean, the tech is changing faster than science can analyze it, but isn't this now outdated?

I don't use AI, but a friend showed me a query that returned the sources, most of which were academic and appeared trustworthy.

I like how when you go pro with Perplexity, all you get is more wrong answers.

That's not true, it looks like it does improve. More correct and so-so answers.