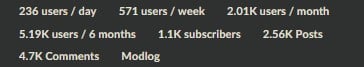

Community statistics are available from the Lemmy API. For example, this URL returns the info for this community: https://lemm.ee/api/v3/community?name=fedigrow

The community statistics are listed in the returned JSON under the `counts` key.
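As a rough illustration, here is a minimal Python sketch that fetches a community's statistics using only the standard library. It assumes the v3 response nests the statistics at `community_view.counts`; check this against your server's actual output. The function names (`community_url`, `extract_counts`, `fetch_counts`) are hypothetical.

```python
import json
import urllib.parse
import urllib.request


def community_url(instance: str, name: str) -> str:
    """Build the v3 community endpoint URL for a community on a given instance."""
    query = urllib.parse.urlencode({"name": name})
    return f"https://{instance}/api/v3/community?{query}"


def extract_counts(payload: dict) -> dict:
    """Return the statistics block from a parsed community response.

    Assumption: the counts sit under community_view -> counts.
    """
    return payload["community_view"]["counts"]


def fetch_counts(instance: str, name: str, timeout: float = 10.0) -> dict:
    """Download the community info and return its counts (requires network access)."""
    with urllib.request.urlopen(community_url(instance, name), timeout=timeout) as resp:
        payload = json.load(resp)  # json.load accepts the binary response stream
    return extract_counts(payload)
```

For example, `fetch_counts("lemm.ee", "fedigrow")` requests the same URL as above and returns just the `counts` dictionary. Keeping the parsing in `extract_counts` separate from the network call makes it easy to test against a saved response.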

The API only returns current numbers, though. To build a time series, you need a script that fetches the community statistics on a regular schedule and stores each result.
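One possible way to store the results is to append one timestamped row per fetch to a CSV file. The sketch below takes the `counts` dictionary parsed from the API response and writes a row to disk. The tracked field names are an assumption based on the v3 `counts` object, and the function names and file layout are hypothetical.

```python
import csv
import os
from datetime import datetime, timezone

# Hypothetical selection of counts to track; adjust to the fields your server returns.
FIELDS = [
    "subscribers",
    "posts",
    "comments",
    "users_active_day",
    "users_active_week",
    "users_active_month",
]


def snapshot_row(counts: dict, when: datetime, fields=FIELDS) -> dict:
    """Turn one counts block into a CSV row stamped with the fetch time in UTC."""
    row = {"timestamp": when.astimezone(timezone.utc).isoformat(timespec="seconds")}
    for field in fields:
        # Leave the cell blank if this server version does not report the field.
        row[field] = counts.get(field, "")
    return row


def append_snapshot(path: str, row: dict) -> None:
    """Append a row to the CSV file, writing the header only when the file is new or empty."""
    is_new = not os.path.exists(path) or os.path.getsize(path) == 0
    with open(path, "a", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=list(row.keys()))
        if is_new:
            writer.writeheader()
        writer.writerow(row)
```

Each run would call `append_snapshot("fedigrow_stats.csv", snapshot_row(counts, datetime.now(timezone.utc)))`. Scheduling is left to the operating system; on Linux, a cron entry such as `0 * * * * python3 /path/to/log_stats.py` (hypothetical script path) would record one row per hour. A flat CSV keeps the data easy to load into a spreadsheet or plotting tool later.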